Johannes Sippola

Johannes is a Data Analytics Consultant who helps businesses become more data-driven. His passions include delivering insights, modeling business challenges, and improving decision-making.

Machine learning (ML) applications are already used in many businesses, especially in data- and analytics-heavy industries. Small or large, every organization has a lot to gain from using advanced analytics. For example, optimizing the supply chain helps decrease costs. Clustering customers helps the marketing department target its efforts at the right customer group. Classifying customers by churn risk helps focus retention efforts on the customers who are about to leave, which prevents losses and increases revenue. If next month’s orders can be estimated with a time-series or regression forecast, planning future business operations becomes easier. All of these are common examples of ML models.

Personally, I believe that the best advanced analytics model is the simplest one that delivers the most business value. In everyday discussions you may hear people talk about AI or ML when, behind these hype terms, a simple linear regression is tackling the business challenge: y = ax + b. Don't get me wrong: if that is the case, you are lucky to have solved your business challenge with a simple solution. Finding a solution, no matter how simple, may still need some help from a computer. This part of the problem-solving process can be called ML development.
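To make the point concrete, here is a minimal sketch of fitting y = ax + b with ordinary least squares in plain Python. The function name `fit_line` and the sample numbers are hypothetical and only illustrate how little machinery such a model needs; in practice you would more likely call a library such as scikit-learn.

```python
def fit_line(xs, ys):
    """Fit y = a*x + b by ordinary least squares (closed-form solution)."""
    n = len(xs)
    if n < 2:
        raise ValueError("need at least two points")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope a: covariance of x and y divided by the variance of x
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    a = sxy / sxx
    # Intercept b: the fitted line passes through the point of means
    b = mean_y - a * mean_x
    return a, b


# Hypothetical data: e.g. marketing spend (x) versus orders (y)
a, b = fit_line([1, 2, 3, 4], [3, 5, 7, 9])
print(a, b)  # 2.0 1.0
```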

Many enterprises have already tested and piloted machine learning models to some extent, but fewer companies are mature enough to deploy ML models to production. Companies gain value from an ML model when its outputs are linked to business decision pipelines and the model is put into production. Data science platforms and frameworks are needed to achieve an operationally excellent, efficient, reliable and secure continuous integration and continuous delivery (CI/CD) cycle for ML models.

If you are heading to Lapland for a skiing trip with your friends, you’d rather drive an SUV than cycle. Here, the bicycle represents developers who have no framework or platform for their ML models, so the models end up running on isolated laptops or unscalable servers. Operating these models is resource-heavy and manual work cannot be avoided, making it a long and burdensome journey. The SUV, in comparison, gives you easy and fast deployments, an agile production environment, and cloud technology powering the CI/CD cycle. With the right tool for the job, your analytics journey will be much smoother.

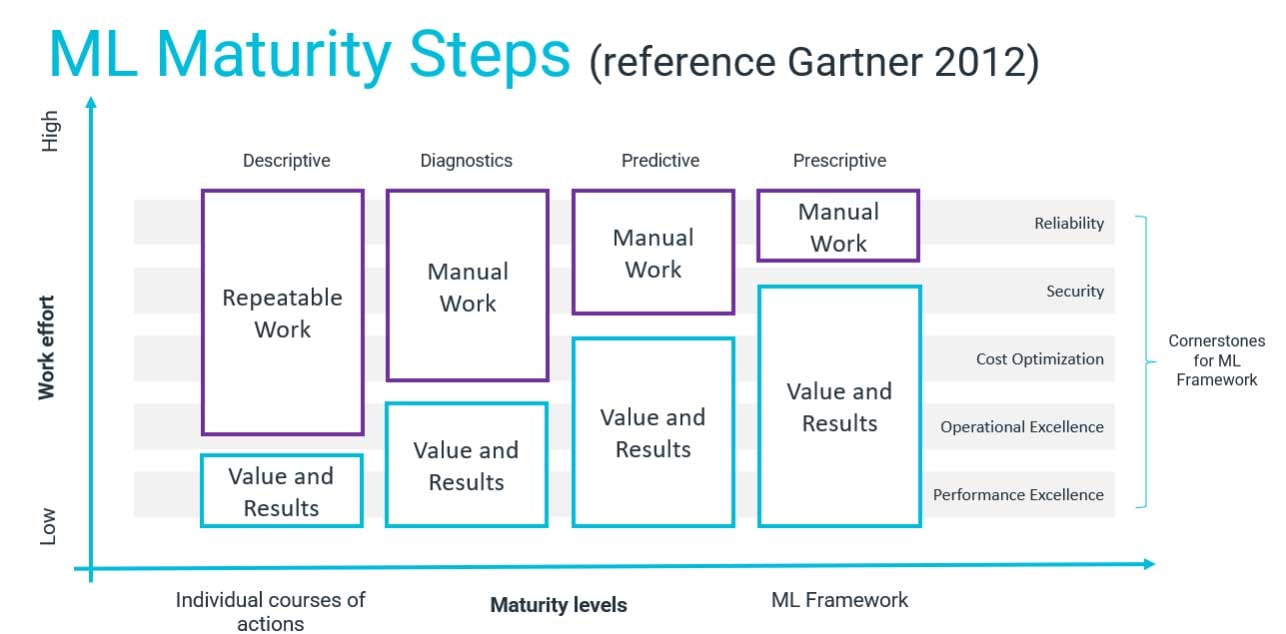

Where does your business stand on the analytics maturity steps in the chart? As you will see, more value is achieved as ML maturity increases. Taking steps towards mature ML development reduces manual and repetitive work in the CI/CD cycle. However, before you can jump to the predictive and prescriptive maturity levels, the baseline needs to be in order. Data quality plays a vital role in analytics in general: any model is only as good as the data fed into it. Read more about data quality in our blog post (note: the article is in Finnish). You succeed in ML projects when the analytics capability is built on top of a data platform. The good news is that ML maturity, data quality and the data platform can all be improved simultaneously.

Another pitfall to avoid is taking too big a piece of the cake. Starting too big may result in long-running projects and business benefits that are slow and difficult to realize. Starting with smaller entities and simpler algorithms often yields quicker wins, and well-defined, well-scoped projects give ML a softer landing. Managing expectations is another crucial aspect to consider. ML is not a crystal ball, but it can support decision-making and, in the best case, help automate it.

Some businesses may say they lack concrete use cases for machine learning, but it's more likely they have simply not articulated them. Lack of communication between business stakeholders and data science teams can be another obstacle. For businesses to benefit from ML, all stakeholders must be on the same page and ideate together. Business understanding and ML understanding need to be brought together so that use cases can be identified.

Companies often succeed in piloting machine learning but find it challenging to deploy the models and gain actual business value from them. Good and valuable work may already have been done in the business unit, but without a framework, a data science platform and infrastructure, valuable models are left as isolated experiments on data scientists' laptops or in repositories. Left there, the models cannot be properly linked to the decision-making pipelines where the actual value of ML models is generated.

Deploying models is not simple without the right tools. Model orchestration is complex: which model iteration should be put into production, and by what rules? Rules also need to be defined for automatically retraining the models. Data drift, retraining and production activities need to be logged and monitored. It's essential to have a framework and methodology for controlling ML model versions and their performance metrics.
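As an illustration of controlling model versions and their metrics, here is a minimal in-memory registry sketch. It is hypothetical: the class and method names are invented for this example and are not the API of Azure ML or any other product. Each registration gets a new version number, and the registry can answer "which iteration scored best on metric X?".

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class ModelVersion:
    name: str
    version: int
    metrics: dict  # e.g. {"auc": 0.81}
    registered_at: datetime


@dataclass
class ModelRegistry:
    """Hypothetical in-memory registry: name -> list of versions, oldest first."""
    _versions: dict = field(default_factory=dict)

    def register(self, name, metrics):
        history = self._versions.setdefault(name, [])
        mv = ModelVersion(name, len(history) + 1, dict(metrics), datetime.now(timezone.utc))
        history.append(mv)
        return mv

    def get(self, name, version):
        return self._versions[name][version - 1]

    def latest(self, name):
        return self._versions[name][-1]

    def best(self, name, metric, higher_is_better=True):
        # Select the iteration with the best value of the chosen metric
        history = self._versions[name]
        key = lambda mv: mv.metrics[metric]
        return max(history, key=key) if higher_is_better else min(history, key=key)


registry = ModelRegistry()
for auc in (0.78, 0.83, 0.80):
    registry.register("churn", {"auc": auc})
best = registry.best("churn", "auc")
print(best.version, best.metrics)  # 2 {'auc': 0.83}
```

A real registry would also persist the model artifacts, the training data reference and the code version alongside the metrics; this sketch only keeps the bookkeeping.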

MLOps, or DevOps for machine learning, enables data science teams to collaborate and speed up the CI/CD cycle through monitoring, validation and governance of the models. Data science platforms simplify development and deployment work: a data science platform is a tool to build, train, share, deploy and manage models. Azure Machine Learning is one great example, suitable for all skill levels.

So, why is Azure ML a good data science platform? It supports responsible ML, offering tools that help ensure models are fair and do not discriminate for or against ethnicity, gender, age, or other factors. Data can be protected with differential privacy and confidential computing. During development, experiments are registered and the metrics of every iterative test run can be logged, and they are easy to access in the experiments section of Azure ML. Iterative and time-consuming model development tasks can be automated with AutoML. It’s convenient to start a project by running AutoML to see which data preparation methods, algorithms and parameters the service would choose. ML solutions can also be developed with little coding skill in Azure ML Designer, a low-code, drag-and-drop interface. To understand how data changes over time, data drift can also be monitored in Azure ML.
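To show what "monitoring data drift" means in practice, here is a minimal sketch of one common drift statistic, the population stability index (PSI), for a single numeric feature. This is a generic technique, not Azure ML's own drift implementation; the function name, the equal-width binning and the `eps` smoothing are assumptions made for the example.

```python
import math


def population_stability_index(baseline, current, bins=10, eps=1e-6):
    """Compare two samples of one numeric feature; a larger PSI means more drift."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def proportions(values):
        counts = [0] * bins
        for v in values:
            # Equal-width bins over the baseline range; out-of-range values
            # are clipped into the first or last bin
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        total = len(values)
        # eps keeps the logarithm defined when a bin is empty
        return [max(c / total, eps) for c in counts]

    expected = proportions(baseline)
    actual = proportions(current)
    # PSI = sum over bins of (actual - expected) * ln(actual / expected)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


baseline = [i / 100 for i in range(100)]       # training-time feature values
shifted = [0.5 + i / 200 for i in range(100)]  # hypothetical production values
psi_same = population_stability_index(baseline, baseline)
psi_shift = population_stability_index(baseline, shifted)
print(psi_same, psi_shift)
```

Identical distributions give a PSI of zero, and the score grows as the production data moves away from the training data. Where to draw the alert line is a judgment call; 0.1 and 0.25 are often-quoted rules of thumb.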

We have seen firsthand that giving data scientists the right tools makes standard practices easier to follow, enabling faster ML experimentation, development and deployment. With cloud technology, the production environment can scale automatically. A typical ML framework includes the data science platform Azure ML Services, Azure DevOps for faster and smoother deployments, and analytical engines to power heavy ML computations.

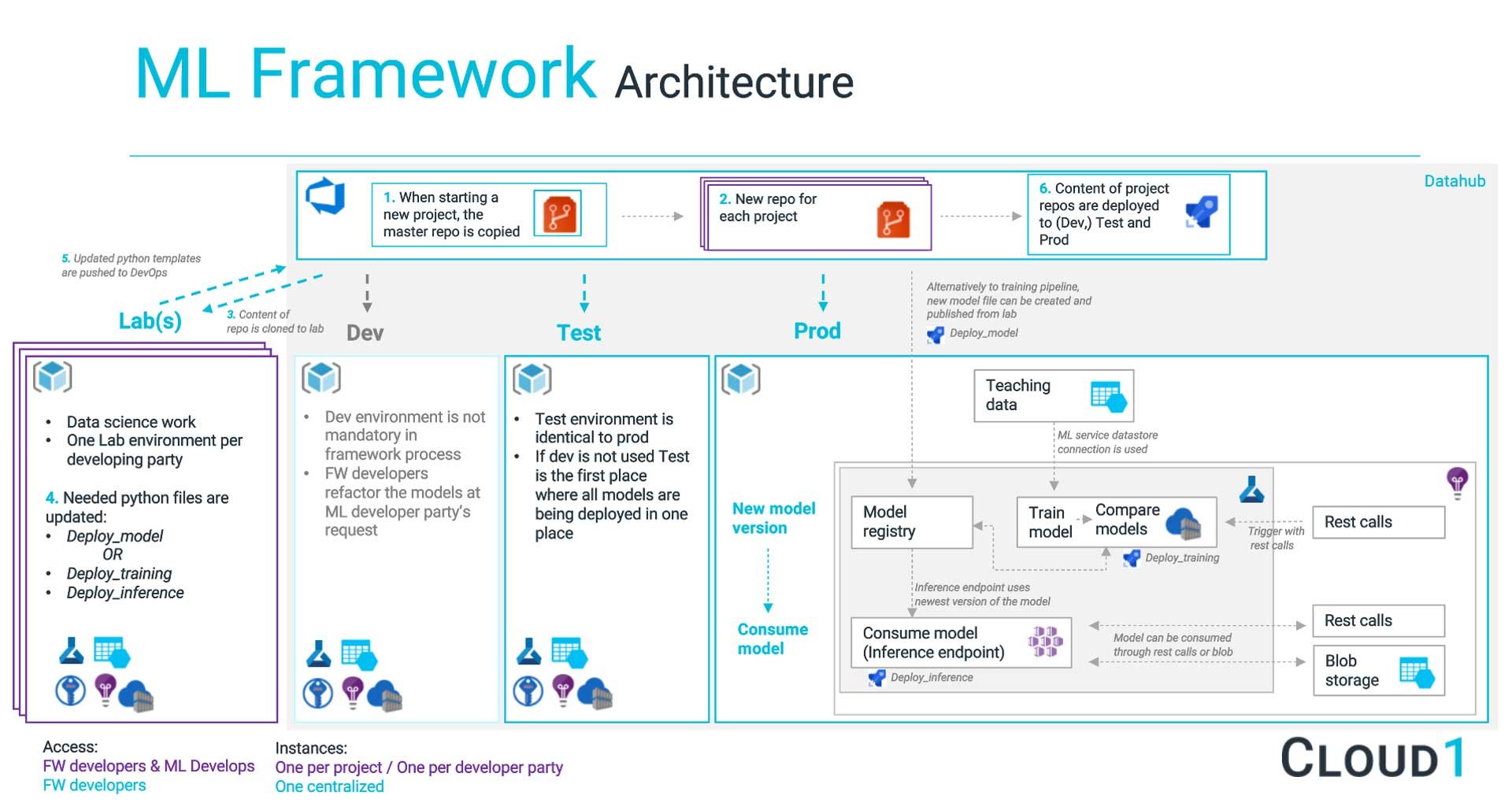

Above is a reference architecture for our ML Framework, which consists of four environments: lab, dev, test and prod. In a typical setup, developers start by cloning the master repository into the lab environment. Lab environments can be in Azure ML or any IDE, and there can be multiple decentralized lab environments. The lab is for ad hoc analysis, research on new solutions and proofs of concept. For every project, a new repository is created in Azure DevOps.

Each repository includes the templates required by the deployment process. Developers fill in the training and inference (model consumption) templates. In a good framework, developers do not need to know much about deployment as long as the templates are filled in and moved to production. Alternatively, already-trained model files can be deployed without a training pipeline. Developed applications are pushed to the repository. The test environment is identical to Prod and is used for testing before the actual deployment to Prod.
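Below is a hypothetical sketch of what filled-in training and inference templates might look like. The inference part follows the `init()`/`run()` shape of Azure ML entry (scoring) scripts, where `init()` runs once when the service starts and `run()` handles each request; everything else, including `MODEL_PATH`, the JSON request format and the nearest-centroid "churn" model, is a placeholder invented for this example, not our framework's actual template.

```python
import json
import tempfile
from pathlib import Path

# Hypothetical artifact location; a real pipeline would take this from its config
MODEL_PATH = Path(tempfile.gettempdir()) / "example_churn_model.json"


# ---- Training template: the developer fills in the model-specific part ----
def train(rows, labels):
    """Placeholder model: nearest-centroid classifier, one mean vector per label."""
    sums, counts = {}, {}
    for row, label in zip(rows, labels):
        acc = sums.setdefault(label, [0.0] * len(row))
        for i, value in enumerate(row):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    model = {label: [s / counts[label] for s in acc] for label, acc in sums.items()}
    MODEL_PATH.write_text(json.dumps(model))  # persist the trained artifact
    return model


# ---- Inference template: init() once at startup, run() once per request ----
_model = None


def init():
    """Load the trained model into memory when the service starts."""
    global _model
    _model = json.loads(MODEL_PATH.read_text())


def run(raw_data):
    """Parse a JSON request and return predictions as JSON."""
    rows = json.loads(raw_data)["data"]

    def nearest(row):
        # Pick the label whose centroid has the smallest squared distance to the row
        return min(
            _model,
            key=lambda label: sum((x - c) ** 2 for x, c in zip(row, _model[label])),
        )

    return json.dumps({"predictions": [nearest(row) for row in rows]})


# Usage: train, "deploy" (init), then score one request
train([[1.0, 0.0], [1.2, 0.1], [0.1, 0.9], [0.0, 1.1]], ["stay", "stay", "churn", "churn"])
init()
result = json.loads(run(json.dumps({"data": [[0.9, 0.2], [0.2, 1.0]]})))
print(result)  # {'predictions': ['stay', 'churn']}
```

Keeping training and inference behind fixed entry points is what lets the deployment pipeline treat every project the same way, regardless of the model inside.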

When deploying to Prod, Dev or Test, models and script files are registered. Data is consumed from Azure ML service storage, where datasets are registered as well. Automatic retraining of the models can be triggered through REST calls, or it can be scheduled. After the retrained model is compared with previous models, the decision to accept or decline it is made based on predefined rules. Manual acceptance is also possible. Applications are deployed on Azure Kubernetes Service (AKS), which manages the consumption of the models. Applications can be consumed through REST calls, or the models can be scheduled to run as batches. All models and applications are fully version-controlled and registered in a central registry. Run logs, alerts and performance metrics of the applications and their different versions can be monitored in Application Insights.
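The "predefined rules" for accepting a retrained model can be as simple as a champion/challenger comparison. The sketch below is hypothetical: the metric name, both thresholds and the three outcomes are assumptions chosen for illustration, and it assumes a metric where higher is better. Marginal improvements are routed to a person, mirroring the manual acceptance option.

```python
from dataclasses import dataclass


@dataclass
class AcceptanceRule:
    """Hypothetical predefined rule for accepting or declining a retrained model.

    Assumes a metric where higher is better (e.g. AUC or accuracy).
    """
    metric: str = "auc"
    min_improvement: float = 0.005  # challenger must beat the champion by this margin
    min_absolute: float = 0.70      # and must clear an absolute quality floor

    def decide(self, champion_metrics, challenger_metrics):
        new = challenger_metrics[self.metric]
        if new < self.min_absolute:
            return "decline"
        if champion_metrics is None:  # first model: nothing to compare against
            return "accept"
        old = champion_metrics[self.metric]
        if new >= old + self.min_improvement:
            return "accept"
        # Marginal gains go to a human reviewer; regressions are declined
        return "manual_review" if new >= old else "decline"


rule = AcceptanceRule()
print(rule.decide({"auc": 0.80}, {"auc": 0.82}))   # accept
print(rule.decide({"auc": 0.80}, {"auc": 0.802}))  # manual_review
print(rule.decide({"auc": 0.80}, {"auc": 0.79}))   # decline
```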

An ML framework includes not only MLOps and data science tools but also management methodology, automated environment setup, and model refactoring and deployment capabilities. When an ML framework delivers fast and secure CI/CD cycles, model quality is assured and ML models generate business value. With an established framework, cumbersome and boring deployment processes can easily be automated. We would be honored to help you and your friends on your journey to Lapland.
